Because the agent’s state observations may be too large for regular Q-learning methods, I decided to use Deep Q Networks (DQN) as my algorithm of choice. To stay within scope, I also decided to use an existing library rather than write the algorithm from scratch.

Problems and solutions

Despite these changes, the concept of having an AI that can play a video game remains the same. However, instead of Q-learning it now uses Deep Q Networks, and instead of Snake (1976) it plays Asterix (1983).

DQN is a combination of Reinforcement Learning and Neural Networks. Rather than storing a quality (Q) value for every state and action in a Q-table, a neural network is trained to estimate the values given by the Q-equation. The output layer has one node per action, each holding the estimated Q-value for that action, and the agent picks its action from these outputs (N. Yannakakis and Togelius 2018).
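To make the role of the output layer concrete, here is a minimal sketch of how an action can be picked from a set of Q-value outputs, and how the target from the Q-equation is usually formed. The numbers, the `epsilon` and `gamma` values and the helper names `choose_action` and `td_target` are made up for illustration; they are not taken from this project or from any library.

```python
import random


def choose_action(q_values, epsilon=0.0, rng=random):
    """Epsilon-greedy choice over one Q-value per action (hypothetical helper)."""
    if rng.random() < epsilon:
        # Explore: pick any action at random.
        return rng.randrange(len(q_values))
    # Exploit: pick the action whose estimated Q-value is highest.
    return max(range(len(q_values)), key=lambda a: q_values[a])


def td_target(reward, next_q_values, gamma=0.99, done=False):
    """Value the network is trained towards: r + gamma * max Q(next state)."""
    if done:
        # No future reward once the episode has ended.
        return reward
    return reward + gamma * max(next_q_values)


# Pretend output layer for a game with four actions (made-up numbers).
q_values = [0.1, 1.7, -0.3, 0.9]
best = choose_action(q_values)  # epsilon=0 means the agent always exploits
```

With `epsilon` above zero the agent sometimes takes a random action, which is how it explores moves it would not otherwise try.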

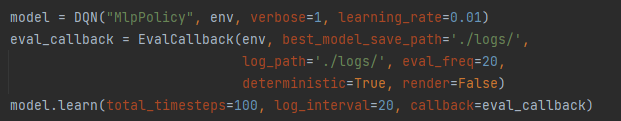

My library of choice was Stable Baselines3. I’ve used it before with DQN in lectures and workshops, and it is designed to work with environment libraries such as OpenAI Gym, which makes it easier for the two systems to work together (Engelhardt et al. 2018). Fig 1 is a screenshot of the DQN model.

As fig 1 shows, the model has parameters that let me adjust the number of steps it performs during training and how often it logs its progress. In addition, the event_callback parameter lets it save data that I can later display on a graph.
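For readers who cannot see the screenshot, a setup along these lines can be sketched as follows. This is not the code from fig 1: it assumes the callback is Stable Baselines3’s `EvalCallback`, that the environment ID is `Asterix-ram-v4`, and that the installed Stable Baselines3 version works with the `gym` package (newer releases expect `gymnasium` instead). The step counts mirror the short test run described later in this post.

```python
# Illustrative settings only; the environment ID and paths are assumptions.
SETTINGS = {
    "env_id": "Asterix-ram-v4",  # assumed Gym ID for the RAM version of Asterix
    "total_timesteps": 100,      # how many steps the agent trains for
    "eval_freq": 20,             # evaluate and record results every N steps
    "log_dir": "./logs/",        # EvalCallback writes evaluations.npz here
}


def train(settings=SETTINGS):
    """Train a DQN agent and record evaluation results (sketch, not tuned)."""
    # Imported here so the settings can be inspected without the libraries installed.
    import gym
    from stable_baselines3 import DQN
    from stable_baselines3.common.callbacks import EvalCallback

    env = gym.make(settings["env_id"])
    eval_env = gym.make(settings["env_id"])  # separate copy used only for evaluation

    eval_callback = EvalCallback(
        eval_env,
        eval_freq=settings["eval_freq"],
        log_path=settings["log_dir"],
    )

    # MlpPolicy suits the flat RAM observation; pixel input would use CnnPolicy.
    model = DQN("MlpPolicy", env, verbose=1)
    model.learn(total_timesteps=settings["total_timesteps"], callback=eval_callback)
    return model
```

Calling `train()` with these settings should trigger an evaluation every 20 steps, so a 100-step run records five sets of results.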

However, this function stores the data as an npz file, a file type I had never used or heard of before. Fortunately, NumPy can load npz files, and the arrays inside can then be written out as csv files (Rodrigo Rodrigues 2018). This solution works very well and removes the problems I had when using the results.
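A minimal sketch of that conversion is shown below. It assumes the npz file holds the `timesteps`, `results` and `ep_lengths` arrays that Stable Baselines3’s `EvalCallback` saves; the helper name `npz_to_csv` and the demo numbers are made up so the example can run without a real training log.

```python
import csv
import os
import tempfile

import numpy as np


def npz_to_csv(npz_path, csv_path):
    """Write one csv row per evaluation: timestep, mean reward, mean episode length."""
    with np.load(npz_path) as data:
        timesteps = data["timesteps"]
        results = data["results"]        # shape: (evaluations, episodes per evaluation)
        ep_lengths = data["ep_lengths"]  # same shape as results
    with open(csv_path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["timestep", "mean_reward", "mean_ep_length"])
        for t, rewards, lengths in zip(timesteps, results, ep_lengths):
            writer.writerow([int(t), float(np.mean(rewards)), float(np.mean(lengths))])


# Demo with made-up numbers standing in for a real evaluations.npz file.
tmp_dir = tempfile.mkdtemp()
npz_path = os.path.join(tmp_dir, "evaluations.npz")
csv_path = os.path.join(tmp_dir, "evaluations.csv")
np.savez(
    npz_path,
    timesteps=[20, 40],
    results=[[100.0, 300.0], [250.0, 350.0]],
    ep_lengths=[[50, 70], [80, 100]],
)
npz_to_csv(npz_path, csv_path)
```

Averaging each row keeps the csv to one line per evaluation; the raw per-episode values could be written out instead if more detail is needed.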

When using DQN on my home computer I received an error, and after some research it became clear that my computer didn’t have enough memory to process all the data at run time. It was also recommended that I use the 64 bit version of Python rather than the 32 bit version (E. M 2021). The error is shown below in fig 2.

This problem is quite small and shouldn’t affect the scope of the AI, as I can simply move the project to the 64 bit version of Python. To work around the error, I also decided to try running the project on the university’s computer, as it has more memory and the 64 bit version of Python.

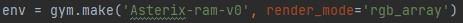

After trying the DQN AI on the university computer I was surprised to experience the same problem. However, after some experimentation I replaced the current version of the Asterix environment with its RAM counterpart, which made it work. Instead of monitoring every pixel in the environment, the agent reads the contents of the emulated console’s RAM to make decisions on what to do (Anonymous 2016). This allowed me to run the Atari environment while still using DQN as the agent’s AI. A screenshot of the code that I used for the RAM environment is shown below in fig 3.
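A rough calculation suggests why the RAM version is so much lighter. The sketch below assumes unprocessed Atari frames of 210 x 160 pixels with 3 colour values of one byte each, a 128 byte RAM observation, and a replay buffer of 1,000,000 observations (the default `buffer_size` for DQN in Stable Baselines3). The actual array in the error from fig 2 may have been a different size, and the helper name `buffer_bytes` is made up.

```python
def buffer_bytes(obs_shape, bytes_per_value=1, buffer_size=1_000_000):
    """Bytes needed to hold `buffer_size` observations of the given shape."""
    values = 1
    for dim in obs_shape:
        values *= dim
    return values * bytes_per_value * buffer_size


pixel_bytes = buffer_bytes((210, 160, 3))  # one raw Atari frame per observation
ram_bytes = buffer_bytes((128,))           # 128 bytes of console RAM per observation

print(f"pixels: {pixel_bytes / 1e9:.1f} GB")  # pixels: 100.8 GB
print(f"RAM:    {ram_bytes / 1e6:.0f} MB")    # RAM:    128 MB
```

A real buffer also stores actions, rewards and next observations, so these figures are lower bounds. Even so, the pixel version asks for far more memory than most computers have, while the RAM version fits comfortably.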

Once this problem had been taken care of, I began a test to see if the agent could be trained and store its data. I set the agent’s parameters to perform 100 steps of training and log its progress every 20 steps. As figs 4 and 5 show, the data from each step was recorded and converted to a csv file.

I also found that I can speed up the training process by changing the render mode from ‘human’ to ‘rgb_array’. With this setting no window appears and the environment runs much faster, too fast for humans to perceive. Setting the render mode to ‘human’ lets you watch the AI play the environment, but at a much slower pace (Brockman et al. 2016).

Further enquiries

Now that I have a basic setup of the AI, I wonder how well it could perform and whether it could be used in a 3D environment. For example, could the AI play a 3D game like Dark Souls and fight enemies successfully? Of course, this would take a lot longer than 5 weeks to make, and I theorise that two additional systems would be needed: one to extract the correct data from the RAM and another to allow the AI to interact with the game itself. That being said, I think this could be possible, since machine learning has been used in the past to play a first-person shooter (Khan et al. 2020).

Reflection and conclusion

After looking into DQN and resolving this error, I have found that it is quite simple to set up and train a DQN AI, which is fortunate as time is running short. However, when picking the environment I need to be careful about how the AI collects its data, as the computer may not be powerful enough. Going forward, I intend to display this data on a line graph showing the results per evaluation callback, with the episode total on the x-axis and the reward total on the y-axis, to show the AI’s progression through each iteration.
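As a rough idea of how that graph could be produced, the sketch below averages the rewards recorded at each evaluation and plots them as a line. It assumes matplotlib is used for the plot and that the x-axis counts evaluations; the helper names and the example rewards are made up, not results from the agent.

```python
def mean_reward_per_eval(results):
    """Average the episode rewards recorded at each evaluation callback."""
    return [sum(rewards) / len(rewards) for rewards in results]


def plot_progress(results, out_path="progress.png"):
    """Save a line graph of mean reward against evaluation number."""
    # Imported here so the averaging helper works without matplotlib installed.
    import matplotlib
    matplotlib.use("Agg")  # draw to a file instead of opening a window
    import matplotlib.pyplot as plt

    means = mean_reward_per_eval(results)
    evaluations = range(1, len(means) + 1)
    plt.figure()
    plt.plot(evaluations, means, marker="o")
    plt.xlabel("Evaluation")
    plt.ylabel("Mean reward")
    plt.savefig(out_path)
    plt.close()


# Made-up rewards: three evaluations with two episodes each.
example_results = [[100.0, 300.0], [250.0, 350.0], [400.0, 500.0]]
means = mean_reward_per_eval(example_results)
```

Calling `plot_progress(example_results)` would save the graph as `progress.png`; a rising line would suggest the agent is improving between evaluations.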

Bibliography

Asterix. 1983. Atari, inc, Atari, inc.

Asynchronous Deep Q-Learning for Breakout with RAM Inputs. 2016.

BROCKMAN, Greg et al. 2016. OpenAI Gym. arXiv preprint arXiv:1606.01540.

ENGELHARDT, Raphael, Moritz LANGE, Laurenz WISKOTT and Wolfgang KONEN. 2018. Shedding Light into the Black Box of Reinforcement Learning.

E. M, Bray. 2021. ‘Python – Unable to Allocate Array with Shape and Data Type’. Available at: https://stackoverflow.com/questions/57507832/unable-to-allocate-array-with-shape-and-data-type. [Accessed Feb 2,].

KHAN, Adil et al. 2020. ‘Playing first-person shooter games with machine learning techniques and methods using the VizDoom Game-AI research platform’. Entertainment Computing, 34, 100357.

N. YANNAKAKIS, Georgios and Julian TOGELIUS. 2018. Artificial Intelligence and Games. Springer.

Rodrigo Rodrigues. 2018. ‘Numpy – how to Convert a .Npz Format to .Csv in Python?’. Available at: https://stackoverflow.com/questions/21162657/how-to-convert-a-npz-format-to-csv-in-python. [Accessed Feb 4,].

Snake. 1976. Gremlin Interactive, Gremlin Interactive.

Figure List

Figure 1: Max Oates. 2022. screenshot of AI model.

Figure 2: Max Oates. 2022. error showing more memory required to run program.

Figure 3: Max Oates. 2022. example of code used to set up environment.

Figure 4: Max Oates. 2022. returned data from agent training.

Figure 5: Max Oates. 2022. data converted into a csv file.